As a fast-growing tech company, we recently found ourselves inundated with a staggering number of applications for a QA Engineer position. Within just 60 hours, we received an overwhelming 180 applications, making the task of manually reviewing each one a daunting challenge. Faced with this predicament, we turned to the power of Large Language Models (LLMs) to help us efficiently evaluate the candidates and identify the most promising ones.

The Challenge: Reviewing 180 Applications in a Timely Manner

With such a large influx of applications in a short span of time, our small team quickly realized that manually reviewing each one would be an extremely time-consuming and arduous task. We needed a more efficient solution that could help us streamline the evaluation process without compromising the quality of our candidate assessment.

The Solution: AI-Powered Application Reviewer

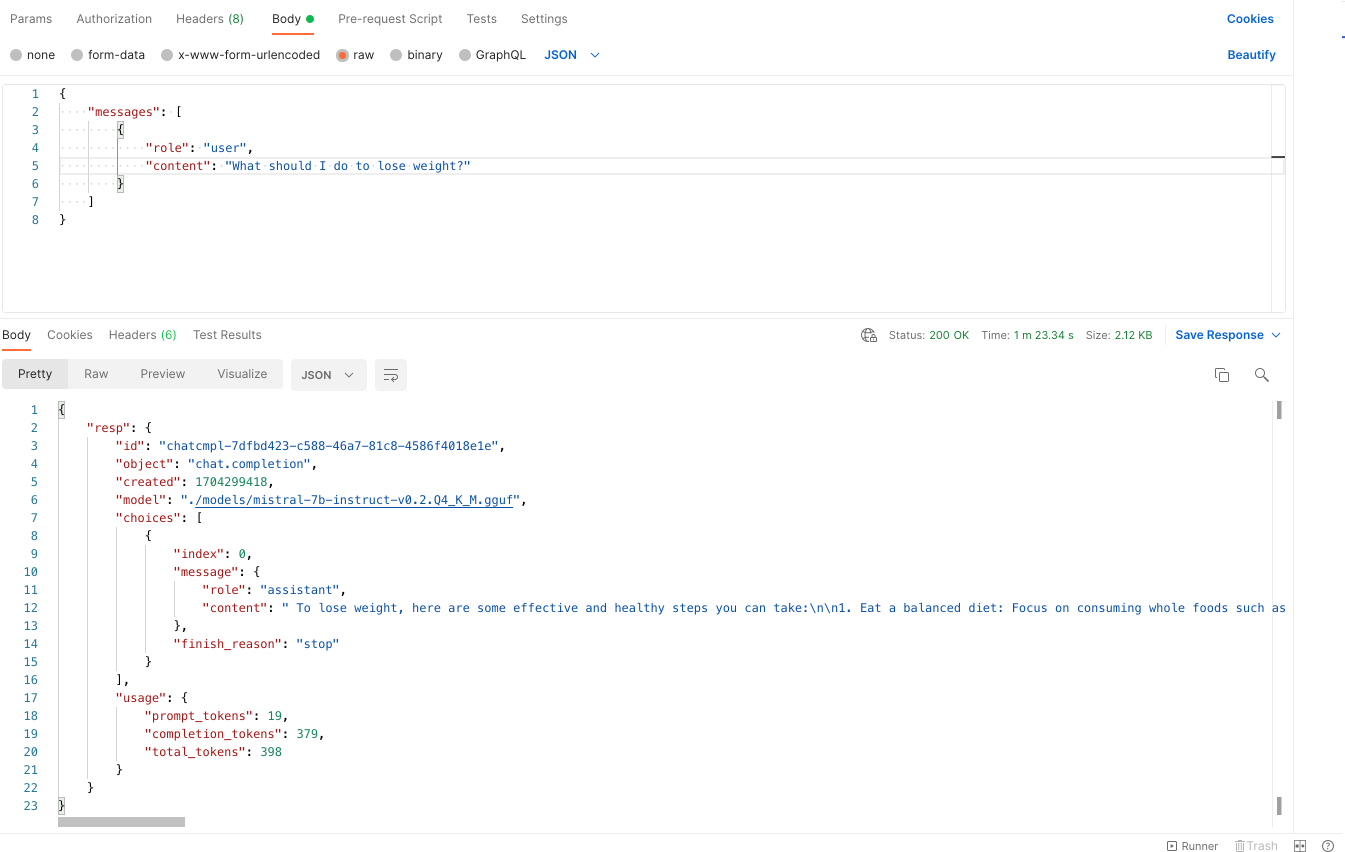

To tackle this challenge, we developed an AI-powered application reviewer that leveraged the capabilities of the Mistral AI model. This tool was designed to analyze candidate responses, provide insights, ratings, and recommendations for each applicant, enabling us to quickly identify the most qualified candidates.

Checkout the Github: https://github.com/sauravpanda/applicant-screening

How It Works

-

Data Preparation: We compiled all the applicant data into a CSV file, ensuring it was ready for processing.

-

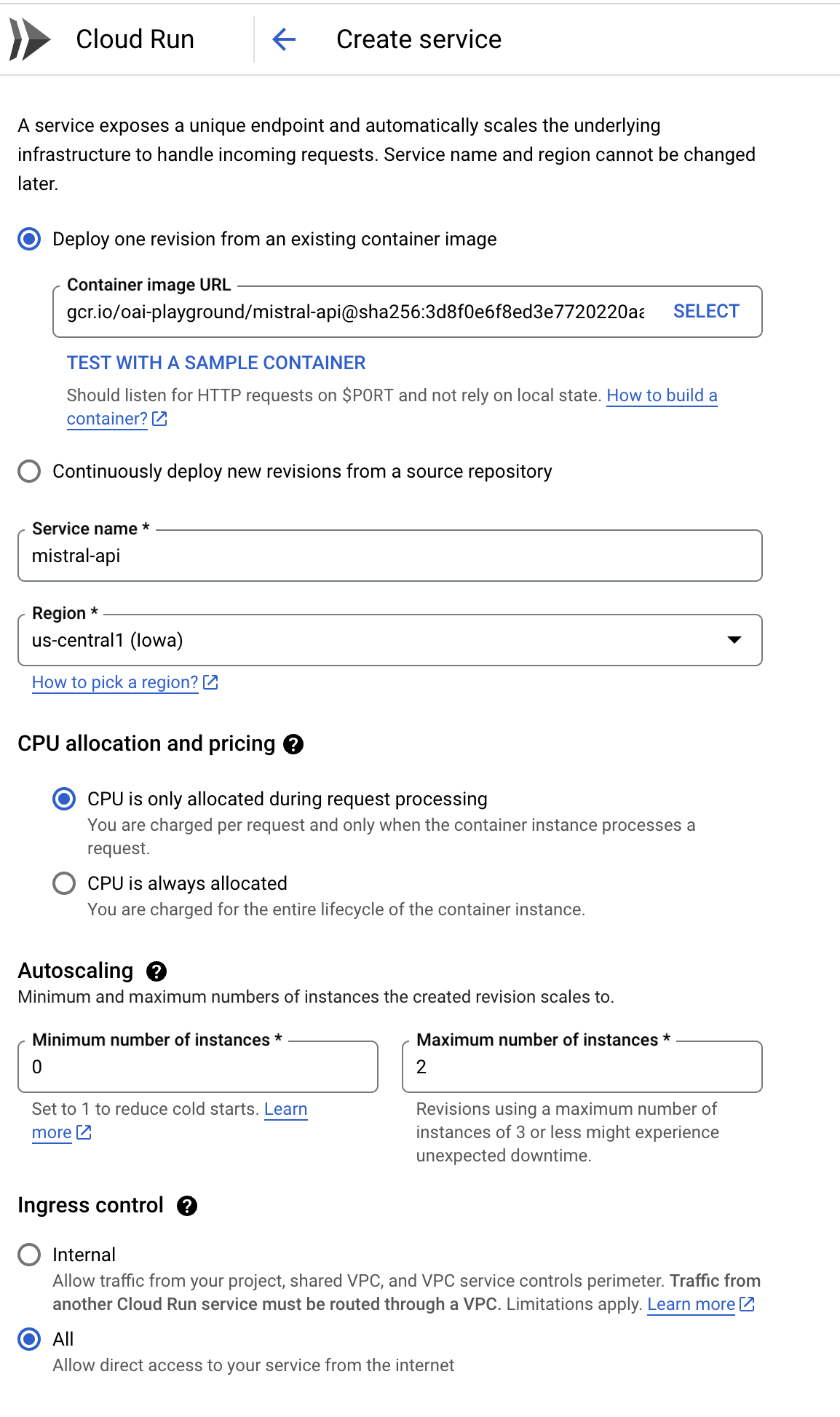

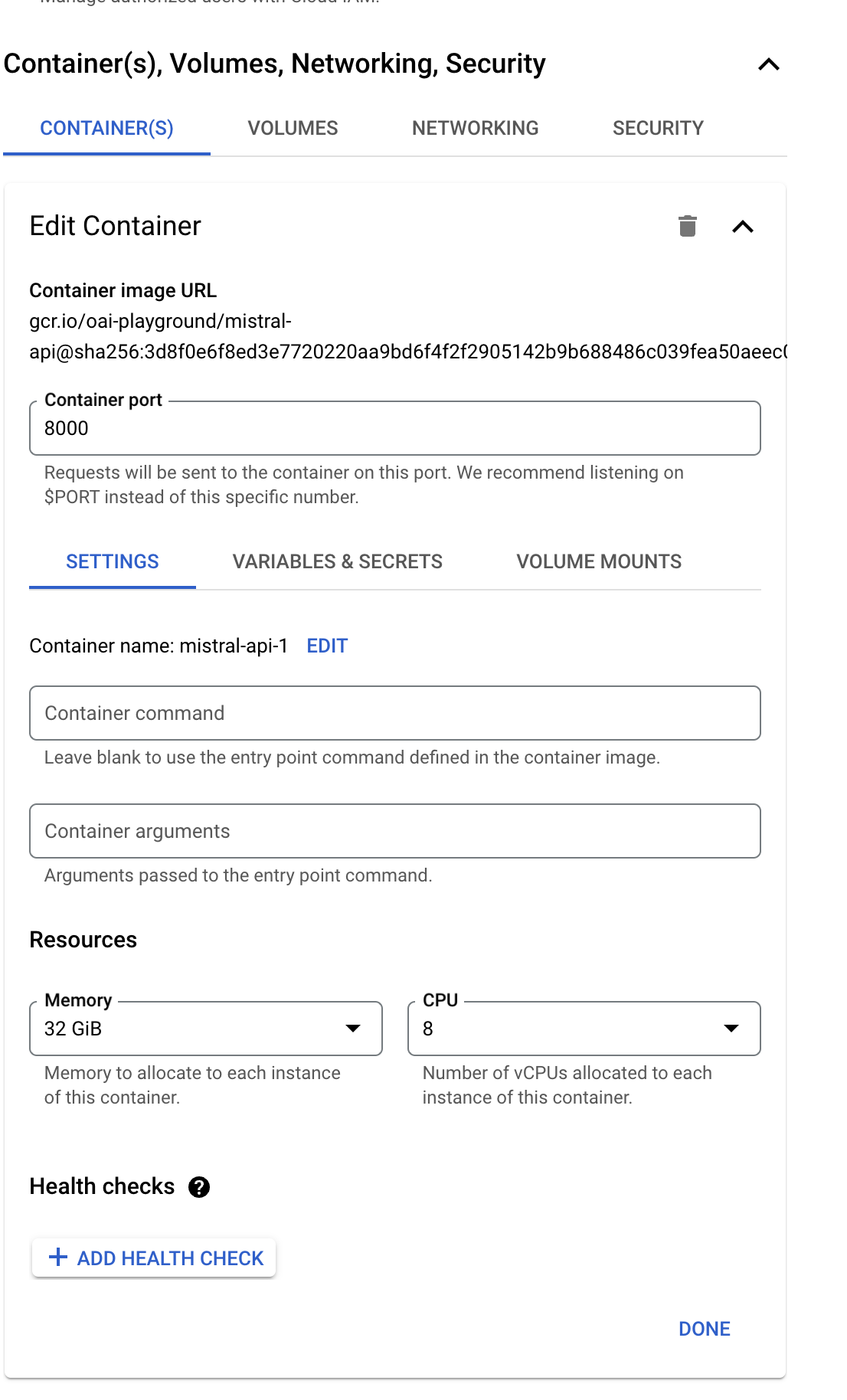

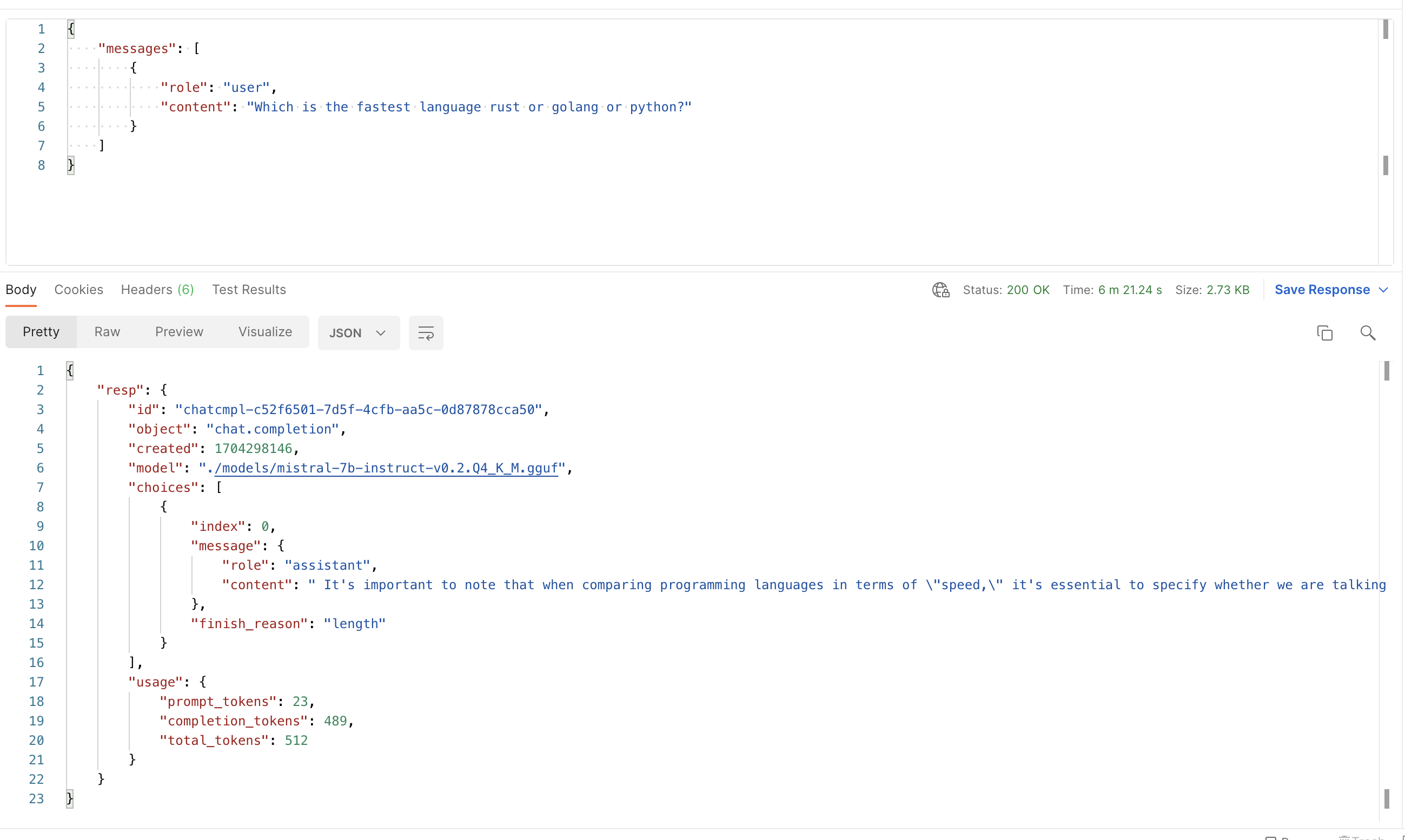

AI Model Integration: Our script utilized the Mistral AI model to analyze each candidate's responses, considering various factors such as their qualifications, experience, and problem-solving abilities.

-

Customizable Evaluation Criteria: We defined specific evaluation criteria based on the requirements of the QA Engineer role, ensuring that the AI model focused on the most relevant aspects of each application.

-

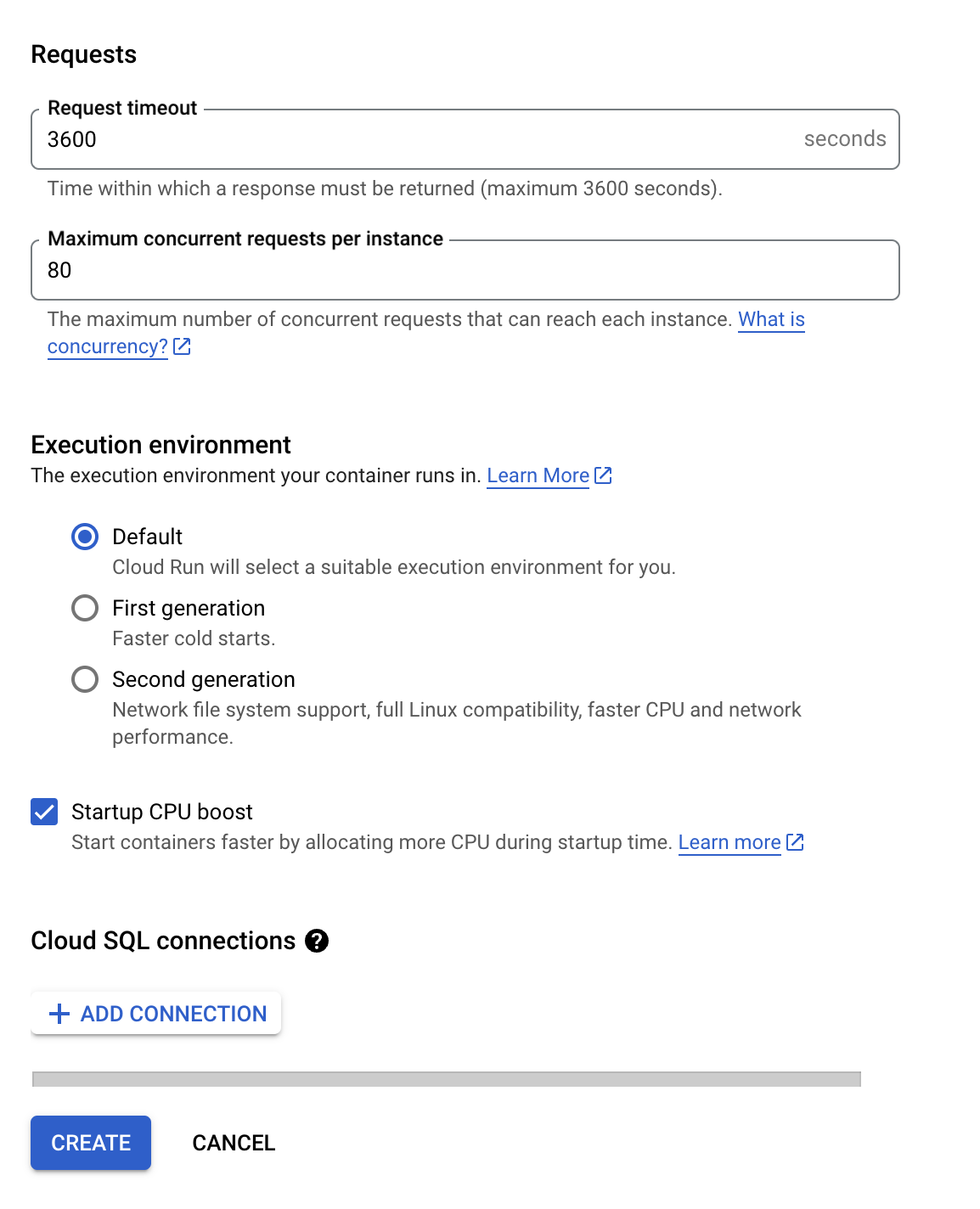

Automated Processing: The script processed each application using the Mistral AI model, generating feedback, reviews, interview recommendations, and ratings for each candidate.

-

Progress Tracking: To monitor the progress of the evaluation process, we integrated the tqdm library, providing us with a visual representation of the task's completion.

-

Results Consolidation: Finally, the script saved the processed data, including the AI-generated feedback and recommendations, into a new CSV file for our team's review.

-

Execution Steps:

- Clone the repository:

git clone https://github.com/sauravpanda/applicant-screening.git - Install the required packages:

pip install pandas litellm tqdm kaizen - Update the job post description in

jobpost.py. - Modify the

CONDITIONSvariable in the script to set specific evaluation criteria. - Prepare your CSV file with applicant data.

- Run the script:

python main.py - When prompted, enter the name of your CSV file (with or without the

.csvextension).

- Clone the repository:

The script will process the applications and save the results in a new CSV file prefixed with "updated_". Additionally, it will provide a summary of the processing, including the number of applicants processed and token usage statistics.

The Impact: Efficient Candidate Evaluation and Time Savings

By leveraging the power of AI, we were able to significantly streamline the candidate evaluation process. Instead of spending countless hours manually reviewing each application, our AI-powered tool allowed us to quickly identify the top 20 candidates we were interested in, based on the AI's recommendations and ratings.

This innovative approach saved us a tremendous amount of time and enabled us to evaluate candidates more objectively and consistently. The AI model's ability to analyze responses and provide insights helped us assess candidates on different subjective criteria, ensuring a fair and comprehensive evaluation process.

Moving Forward: Embracing AI in Hiring Processes

The success of our AI-powered application reviewer has highlighted the immense potential of integrating AI technologies into hiring processes. As we continue to grow and attract a larger pool of applicants, we plan to further refine and expand our AI-driven approach, ensuring that we can consistently identify top talent while maintaining efficiency and objectivity.

By embracing the power of AI, we are not only streamlining our hiring processes but also positioning ourselves as an innovative and forward-thinking company that values technological advancements and their practical applications.